The Challenge

Organizations were stuck between speed and accuracy.

Utilities: A major UK utilities provider had just 3 policy reviewers responsible for thousands of safety policies. Generating risk assessment reports took 7 weeks, and with that volume, mistakes were inevitable.

Legal: Lawyers building medical chronologies had to verify every claim against thousands of pages of patient records. One missed detail could affect case outcomes.

Enterprise: Organizations had no visibility into what questions their teams couldn't answer. Knowledge gaps stayed hidden until something went wrong.

The existing product answered questions fast. But speed without trust is useless when the stakes are high, and trust without efficiency doesn't drive adoption.

The design challenge: Make AI both trustworthy AND efficient for organizations where errors have real consequences.

Role: Senior Product Designer (only designer at the time)

Timeline: Mar 2023 – Feb 2024

Scope: Report generation, citation & fact-checking systems, analytics

Team: Worked closely with the CEO, CTO and a couple of engineers.

How I Approached It

Over the course of the year, I designed a flexible system that let users choose the right solution for their context. Rather than building separate tools for each use case, we created one platform where organizations could:

- Generate reports with section-level citations for compliance and safety documentation

- Build and verify medical chronologies with deep fact-checking for legal teams

- Surface knowledge gaps through analytics that revealed what the organization couldn't answer

The same underlying trust architecture powered all three. Users selected what they needed based on their workflow.

| Context | Problem | Solution |
|---|---|---|
| Utilities | 7-week reports, error-prone process | Report generation with section-level citations |
| Legal/Medical | Thousands of pages to verify | Deep citation linking + fact-checking workflows |
| Organization-wide | Organization knowledge gaps | Analytics revealing what can't be answered |

Understanding the Trust Problem

Working directly with enterprise clients, I learned trust isn't one thing. It depends on context:

| Context | What trust means | Verification needed |
|---|---|---|
| Quick lookup | "Is this roughly right?" | Light: glance at source |
| Safety procedure | "Could someone get hurt if this is wrong?" | Medium: verify specific claims |
| Legal or medical | "Could this affect a case outcome?" | Heavy: audit trail for every fact |
| Generated reports | "Can I stake my reputation on this?" | Complete: section-by-section verification |

One product. Four different trust models. I had to design a system flexible enough to handle all of them.

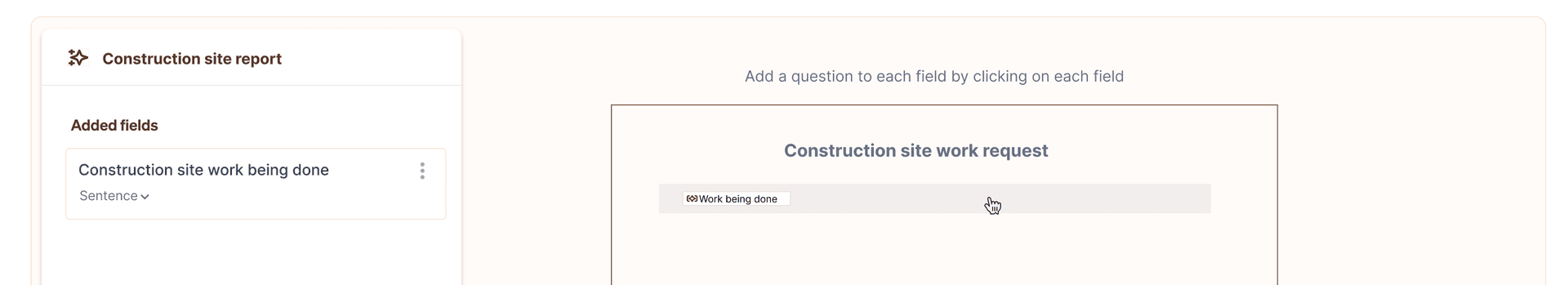

Solution 1: Report Generation for Enterprise

The Challenge

A major UK utilities provider needed risk assessment reports. Their manual process:

- Gather documentation across departments

- Cross-reference safety policies

- Write structured reports with proper citations

- Time: 7 weeks

They didn't need chat. They needed complete documents generated from their knowledge base with the same trust model as manually written reports.

The Decision

The core tension: Automation vs. control.

Fully automated reports would be fast, but users wouldn't trust output they couldn't verify. Too many review steps, on the other hand, would kill the efficiency gain.

I chose template-based generation with section-level citations. Users select a report type, the system generates structured output, and every section links to its sources. Users verify what matters to them: not everything, but anything.

What I Designed

A four-step workflow that balanced automation with control:

- File selection: Users choose which documents the report should draw from.

- Template mapping: Users select an existing template or create their own. Each field maps to a question Humata needs to answer, with constraints to guide accuracy.

- Report generation: Users save the template and generate. The system pulls answers from the selected documents, with citations for each field.

- Human audit: Users review the generated report and verify policy citations where needed.

Outcome

7 weeks → minutes. Same trust model. Radically different efficiency.

Pilot Results

| Metric | Outcome |
|---|---|
| Documents indexed | 1,696 |
| Queries answered | 597 |
| Accuracy | 98% |
| Response time | 3 seconds |

This level of measurement was critical. In high-stakes contexts, "98% accuracy" isn't enough on its own. Users needed to understand exactly where and why the system could fail.

Earlier in my time at Humata, I worked with the engineering team to design an insights system that tracked common questions and identified gaps in the knowledge base. This became the foundation for improving accuracy over time: when we saw patterns in what users were asking and where the system struggled, we could target those areas for training.

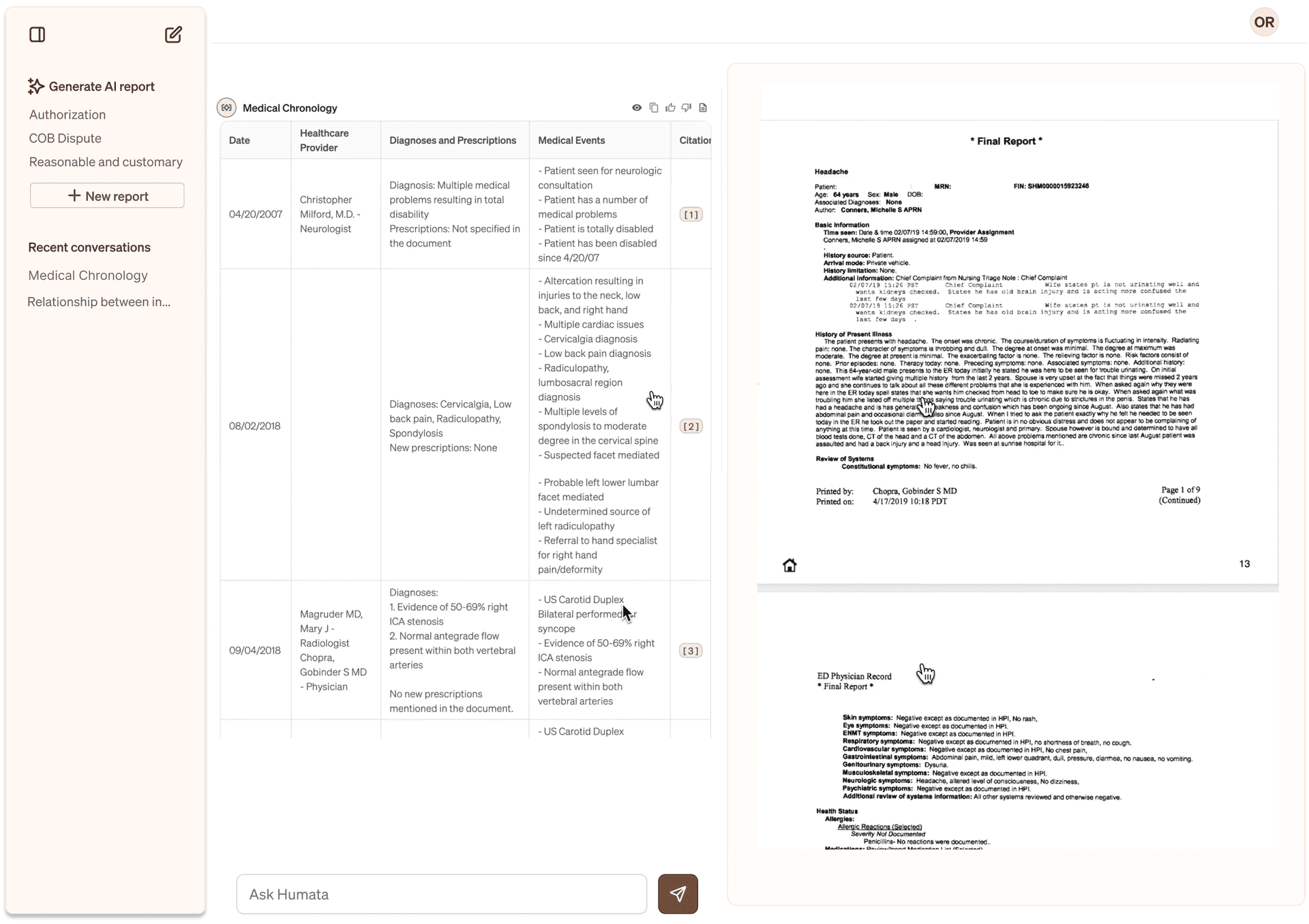

Solution 2: Fact Checking at Scale

The Challenge

Legal teams building medical chronologies from patient records faced a different problem. They weren't generating reports. They were verifying claims against thousands of pages of charts.

Medical chronologies are brutal. A single case can involve thousands of pages of patient records, and lawyers need to verify every claim. One missed detail could decide a case in court. The manual process took weeks, and the stakes couldn't be higher.

The Decision

The core tension: Citation density vs. usability.

Heavy citations on every sentence would make the interface unusable. But legal professionals needed complete audit trails.

Working closely with an in-house lawyer and the engineering team, we chose deep citation linking with context-aware density. Every claim links to the exact passage: not just the document, but the specific paragraph. For legal or medical contexts, the system surfaces the full source trail. For casual lookups, citations stay light.

What We Designed

- Deep citation linking: one click shows the exact source passage, highlighted

- Fact-checking workflows: mark claims as verified, flagged, or needs review

- Medical chronology support: aggregate patient records into a timeline of conditions, allowing lawyers to quickly link a case to an injury or identify false claims

Outcome

Weeks → minutes. Lawyers could now create and review medical chronologies in minutes instead of weeks of manual review, with every claim linked back to the source records and full confidence in the citations.

The Insight

Trust and efficiency aren't tradeoffs. They enable each other.

Users won't adopt AI that's fast but unreliable, and they won't use verification tools that slow them down. The unlock was designing verification so seamless that users trust the system even when they don't check every citation.

At the end of the day, users being able to trust the data is what drives adoption. Without trust, the product sits unused. With trust, it becomes part of how organizations work.

That's the design lesson: trust isn't about perfection. It's about transparency that doesn't get in the way.